Using AI to Improve Our Decisions: A Virtuous Cycle

Large language models can help us become better thinkers, and we have no choice but to take up that challenge.

Large language models such as ChatGPT are among the most important new technologies of our time. The big difference between artificial intelligence (AI) as we have commonly known it and these new models is that we can communicate with the latter in ordinary language, almost as if they were human beings, albeit ones equipped with a vast repository of knowledge.

Extending the use of AI into qualitative analysis

Until now, AI in management has been used almost exclusively for quantitative modeling. Quantitative models require explicit, structured data of adequate quality. This limits their usefulness, because most of the information we use in decision making is qualitative, often tacit knowledge: the knowledge residing in our minds, based on experience, insights and relationships. While our quantitative modeling skills keep improving, not least with the assistance of AI, our qualitative analyses still suffer from a serious lack of structure and rigour, which harms our decisions. With the advent of large language models, this is now changing. If managers take advantage of the possibilities these new models offer, they can vastly improve their analysis and thus their decision making.

A structured and logical approach is critical

When it comes to qualitative analysis for problem solving and strategy formulation, a wealth of frameworks is available, some better than others. Here, I focus on the Logical Thinking Process, the framework I have specialised in. It is a holistic framework for analysis and strategy formulation based on two principles. First, to effectively solve persistent systemic problems, we must take the systemic nature of the organisation into account. Second, effective analysis and decision making must be based on strict adherence to the rules of logic. The process consists of five steps. First, we define the system's goal and the conditions necessary to reach it. Second, we determine which necessary conditions are not fulfilled and identify the root causes explaining why. Third, we resolve any inherent conflicts identified in the second step. Fourth, we map out precisely whether and how the identified solutions will ensure that the organisation reaches its goal, whether further actions are needed, and whether the proposed solutions may have unintended consequences. Fifth, we work out in detail how the proposed and verified solutions can be implemented.

The Logical Thinking Process relies on strict adherence to the rules of logic. This is what makes it more challenging than most qualitative analysis frameworks, and it is also the key to ensuring that the analysis is valid. Without adherence to those rules, cause-effect analysis is likely to produce invalid conclusions that simply confirm our preconceived ideas; in other words, our biases.

Improving quality and saving time

Historically, qualitative analysis has largely been based on gut feeling rather than a formal, systemic approach. To see this, take a quick look at a few corporate statements of vision, mission and values (look out for contradictions), a couple of SWOT analyses (look out for overconfidence), high-level strategic plans (look out for wishful thinking), and big project plans (look out for unrealistic deadlines). Once we move into a realm where data and numbers are missing, we frequently end up with contradictory and often meaningless gibberish. The same applies to day-to-day decisions where hard numbers are missing and we have to rely on qualitative analysis.

In short, where rigorous cause-effect analysis is most needed, it is usually omitted. There are two main reasons for this. The first is the lack of a truly structured logical approach. The second is that applying such an approach is so intellectually challenging and time-consuming that most of us never use it, even when we know how. As a result, the quality of our qualitative analyses leaves a lot to be desired.

For someone trained in it, laying out a root cause analysis does not have to take much time. Apart from the actual fact-finding, the truly time-consuming part of the work is scrutinising the analysis: looking for missing premises behind conclusions, validating logical connections, and clarifying the wording of our statements. The usual approach is to do the analysis first, set it aside for a couple of days, review it, have a colleague scrutinise it, then present it to the team for review, and so on.

This is where large language models will be a game changer. We can now sketch out a rough analysis, enter the logical statements we want verified, and get an instant response. We do not have to limit ourselves to individual statements. Using the correct form, we can also enter a whole sequence of logical statements, have the model check it and suggest improvements, correct it where we believe it has misunderstood us, ask it to sharpen our wording when needed, and so on. What we are really doing is partnering with the language model to produce a robust analysis in a fraction of the time it would otherwise take.
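The article does not say exactly what "the correct form" looks like. As a minimal sketch, assuming the sufficiency phrasing commonly used in Current Reality Trees ("If A and B, then C"), the Python below turns a chain of cause-effect statements into one numbered block of text to paste into the model. The class `SufficiencyStatement`, the function `build_review_prompt` and the instruction wording are hypothetical illustrations, not the author's actual prompt, and no model API is called.

```python
from dataclasses import dataclass


@dataclass
class SufficiencyStatement:
    """One cause-effect link: if all premises hold, the conclusion follows."""

    premises: list[str]
    conclusion: str

    def as_text(self) -> str:
        # Join premises with "and" so the AND relationship is explicit.
        return f"If {' and '.join(self.premises)}, then {self.conclusion}."


def build_review_prompt(statements: list[SufficiencyStatement]) -> str:
    """Number each statement and wrap the chain in review instructions.

    The instruction wording is a hypothetical example, not the author's prompt.
    """
    lines = [
        "Review the following chain of cause-effect statements.",
        "For each statement, check whether the premises are sufficient",
        "to produce the conclusion, and list any missing premises.",
        "",
    ]
    for number, statement in enumerate(statements, start=1):
        lines.append(f"{number}. {statement.as_text()}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder statements only; real ones would come from your own tree.
    chain = [
        SufficiencyStatement(["premise A", "premise B"], "effect C"),
        SufficiencyStatement(["effect C", "premise D"], "effect E"),
    ]
    print(build_review_prompt(chain))
```

Keeping each link as explicit premises plus a single conclusion makes it easy to add a premise the model flags and regenerate the text for another round of review.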

When we consider how strongly qualitative analysis affects our decision making, how often it lacks structure and quality, and how much time and effort it takes to raise it to the next level, the game-changing nature of AI-assisted qualitative analysis should be immediately clear.

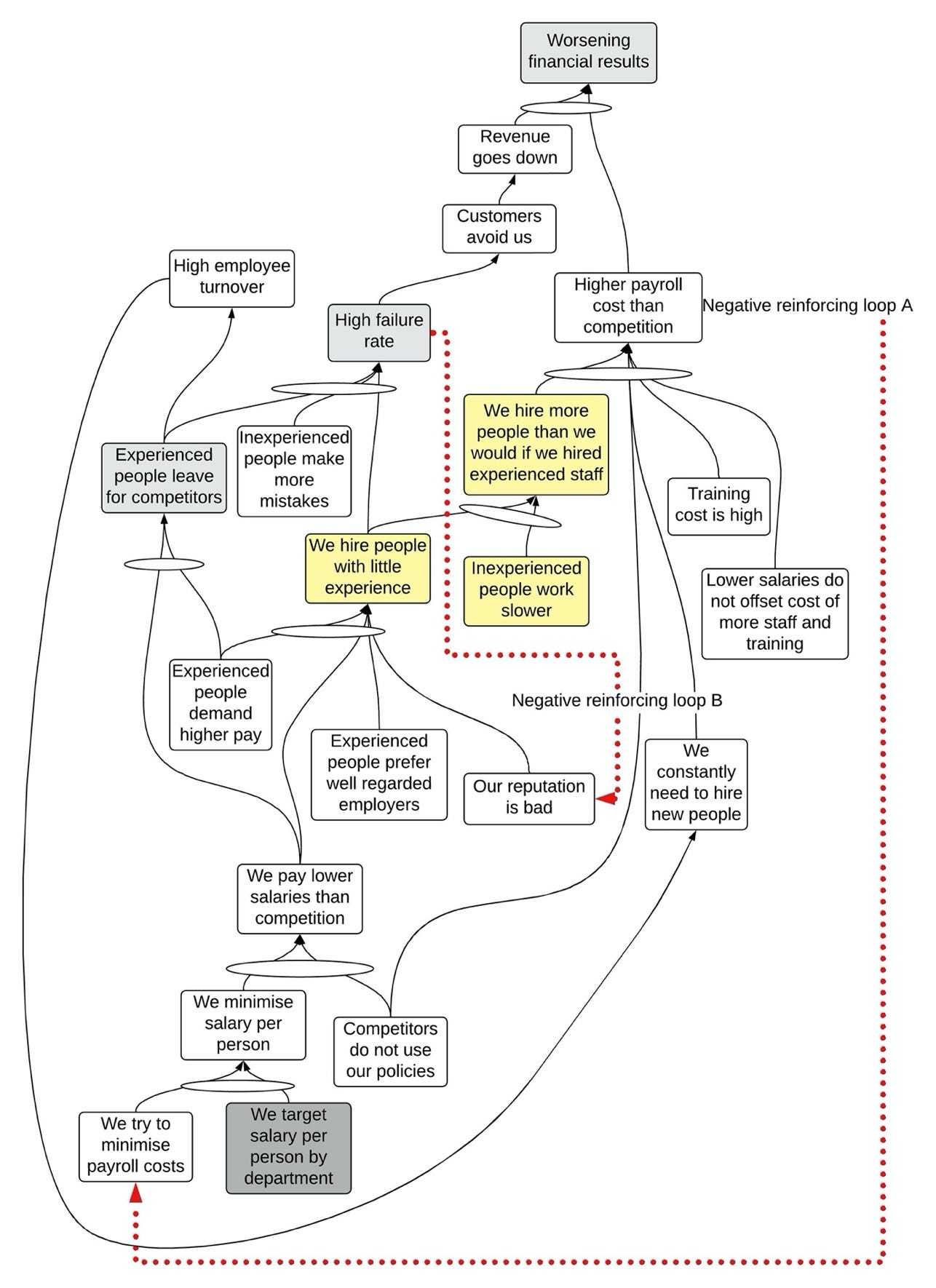

A Current Reality Tree example: Worsening financial results

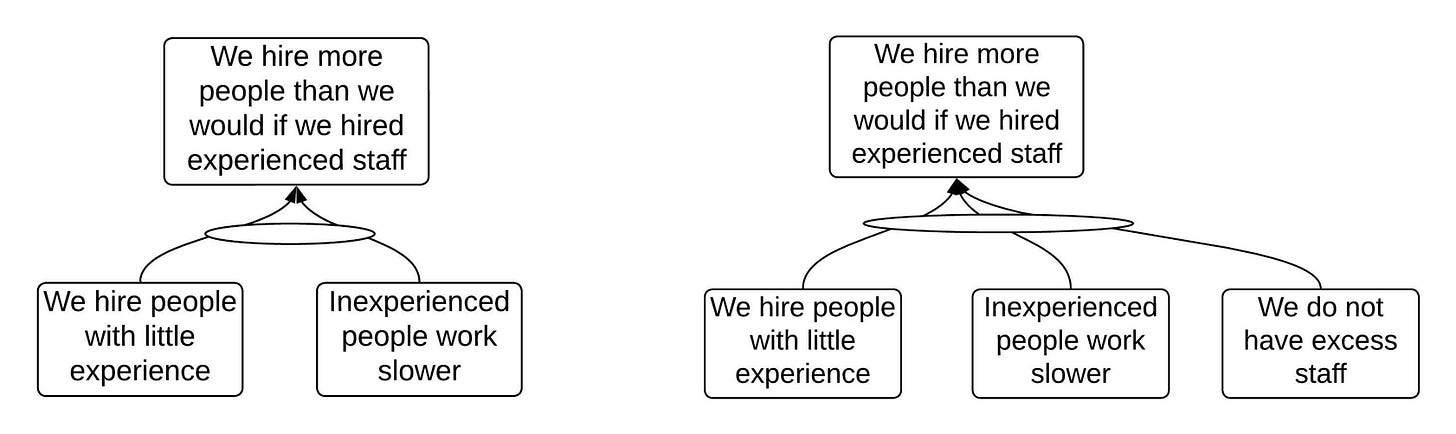

The diagram above shows a logical analysis, a Current Reality Tree, of how a faulty KPI leads to worsening financial results at a specialised hospital. Focusing on one part of the analysis, coloured yellow, we ask ChatGPT to evaluate whether the premises are sufficient to produce the result.

Within a couple of seconds, the model identifies a missing premise: to conclude that we have to hire more people, we must add the premise that we do not already have excess staff. The original syllogism is on the left; on the right is the version improved based on ChatGPT's input. This additional premise is critical. If our assumption that we do not have excess staff is in fact wrong, that may well be the critical root cause behind the hiring spree.
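What "sufficient premises" means can be made concrete with a small propositional check: premises are sufficient if the conclusion holds in every situation where both the premises and our assumed cause-effect rules hold. The Python sketch below checks this by brute force over all true/false assignments. It illustrates the logic only, not how ChatGPT reasons. The proposition `workload_up` and the rule in `domain_rule` are hypothetical stand-ins, because the yellow premises are only available in the diagram; `excess_staff` and `must_hire` correspond to the premise and conclusion named in the text.

```python
from itertools import product

# Hypothetical propositions: "workload_up" stands in for the premise(s) in the
# diagram; "excess_staff" and "must_hire" reflect the article's text.
VARIABLES = ["workload_up", "excess_staff", "must_hire"]


def domain_rule(world: dict[str, bool]) -> bool:
    # Assumed rule for illustration: we must hire exactly when workload is up
    # and we do not already have excess staff.
    return world["must_hire"] == (world["workload_up"] and not world["excess_staff"])


def entails(premises: dict[str, bool], conclusion: str) -> bool:
    """True if the conclusion holds in every world consistent with the rule and premises."""
    for values in product([True, False], repeat=len(VARIABLES)):
        world = dict(zip(VARIABLES, values))
        if not domain_rule(world):
            continue  # world violates the assumed rule
        if any(world[name] != value for name, value in premises.items()):
            continue  # world contradicts a stated premise
        if not world[conclusion]:
            return False  # counterexample: premises hold but conclusion fails
    return True


if __name__ == "__main__":
    original = {"workload_up": True}
    improved = {"workload_up": True, "excess_staff": False}
    print(entails(original, "must_hire"))  # False: excess staff breaks the link
    print(entails(improved, "must_hire"))  # True
```

Without the excess-staff premise, the check finds a counterexample (workload up, excess staff, no need to hire), which is the kind of gap the model pointed out.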

We might well say that this should have been obvious all along, but when we analyse a situation qualitatively, we always make unstated assumptions, very often without realising it. We are always, to some extent, inclined to assume things we do not really know. The language model provides us with "external eyes": it acts as an unbiased observer with an excellent ability to scrutinise logical statements.

We must become better at logical thinking

AI will not think for us. Believing that it will is the most dangerous trap its users can fall into. The information contained in the models is far from perfect. The models can easily misunderstand our input, in which case the old rule "garbage in, garbage out" applies. The models lack the relevant subject-matter knowledge, so their suggestions may often be wrong or irrelevant. This is why we cannot outsource our thinking to AI; it is no silver bullet. But these models are here to stay, and just as with any new, important and useful technology, we must take advantage of the possibilities they offer. If we don't, we risk being left behind.

Large language models can help us become better thinkers. At the same time, their advent requires us to become much better thinkers. We can and must use them to improve our own analytical skills, and the better those skills become, the better we become at using the models to improve our skills even further. This is the virtuous cycle offered by large language models. But to take advantage of this promising new tool, we must know and understand the rules of logic, and we must be trained and skilled in applying a structured approach to decision making and analysis.

Managers must take up this challenge and embrace the possibilities that large language models offer. The ability to use this new technology effectively, and thereby enter the virtuous cycle of constantly improving our ability to think logically, may become the most important key to success in management, and that may happen much sooner than most of us realise today.

ATTENTION: On June 26th, Philip Marris and I will host a webinar on using AI to improve structured logical analysis. The webinar is offered by Marris Consulting in Paris, and registration is free: https://www.marris-consulting.com/en/conferences/webinar-artificial-intelligence-and-logical-thinking-process

I am not competent enough to judge the risks associated with the current rapid development of A.I., but I very much like the idea Thorsteinn is developing: working out how A.I. can help us become better thinkers.

P.S. Don't miss the webinar on this subject on the 26th of June!

https://www.marris-consulting.com/en/conferences/webinar-artificial-intelligence-and-logical-thinking-process

Hi, Thorsteinn. This is a great piece highlighting how ChatGPT can help us find flaws in our own thinking, rather than replacing that thinking. Question: I'm assuming you can't input a logic tree into ChatGPT, so I'm curious: what was your prompt to test your logic assumptions? I tried the three entities you suggested in ChatGPT myself and got a different response.