Invisible Friends, Trojan Horses and Conflicts

Most organizations operate within a relatively predictable environment. Large Language Models will fundamentally disrupt that environment. Are we up to the challenge?

Jackie recently completed her master's degree in sociology and joined a large retail company, where she works in data analysis and reporting. Despite lacking prior work experience, she quickly mastered her responsibilities, considerably faster than the most recent recruit, who was hired the previous year.

The Invisible Friend

Jackie has an invisible friend who doesn't work at the company but interacts with her solely via her computer and phone. Nevertheless, this friend possesses extensive knowledge about her tasks. Whenever she encounters unfamiliar work, she consults him, and he swiftly guides her. He quickly completes tasks that would otherwise take days, often finishing them in just minutes. Jackie keeps finding out more and more about her invisible friend's capabilities and limitations.

Eric, Jackie’s colleague, has been with the company for seven years. He has no invisible friend, so Jackie is significantly more efficient than he is despite her shorter tenure.

Jackie’s invisible friend is an LLM (Large Language Model) she subscribes to personally. Eric isn’t opposed to using AI but feels uncomfortable paying for a subscription himself, believing the company should cover the cost. However, the CEO is hesitant: he demands clear demonstrations of benefit and thorough evaluations from IT, and he has budgetary concerns.

Fast forward a year, and Jackie has left the company. Her success drew attention, and she received a job offer from a competitor that was already embracing LLMs and encouraging experimentation and knowledge sharing. Although the salary was slightly better, her main motivation was different: she recognized stagnation at her previous workplace, observed growing dissatisfaction among colleagues, and saw competitors using LLMs to pull ahead.

Jackie has stayed in contact with Eric, who has told her that more employees are using free AI tools to enhance their work. However, these free tools have created data security risks, and a leak of sensitive data recently occurred. Consequently, the CEO has banned AI usage entirely. Meanwhile, IT continues searching for an optimal solution, a search complicated by the frequent updates and advancements in the field. Eric is now looking elsewhere and asks about job openings at Jackie’s new workplace.

The Trojan Horse

According to Greek legend, recounted in Homer's Odyssey and Virgil's Aeneid, the Greeks besieged Troy sometime around 1200-1300 BC. After years of unsuccessful siege, they constructed a large wooden horse filled with hidden warriors and left it outside Troy’s gates. The Trojans brought the horse inside the city. The hidden soldiers emerged, opened the gates, and Troy was conquered.

What caused Troy’s downfall? It wasn’t the ingenuity of the Greeks. It was the Trojans' own failure to inspect what they were bringing inside. Had they been cautious, they could have captured the hidden warriors, potentially turning them into allies and utilizing their strategic expertise. Their negligence ultimately led to defeat.

Implementing LLMs is inherently experimental. AI is not traditional software; it is more accurately described as an assistant with uncertain capabilities. Companies must experiment to discover the best uses for LLMs. Proficiency in using LLMs has already become essential. Adoption is widespread yet varied, and its effectiveness depends heavily on clear strategies, proper investment, and explicit guidelines.

Even without official endorsement, workplaces naturally become testing grounds. Proactive employees independently use free or self-funded LLM subscriptions, mastering these tools on their own.

Performance differences quickly emerge between LLM users and non-users. Some excel, others lag behind. Managers sometimes attempt to restrict LLM usage, which prompts covert use. Some employees avoid the tools entirely due to uncertainty or fear of violating implicit rules. Distrust grows among employees and towards management, damaging workplace culture and competitiveness.

AI can be likened to the Greeks' Trojan Horse. It stands outside — probably already within — the workplace "walls." Whether it brings benefit or harm depends entirely on organizational decisions.

The Conflict

When companies fail to take action, managerial negligence or ignorance is often blamed. Yet what typically prevents action is uncertainty and a deep-seated conflict:

Managers recognize genuine opportunities, but they want to make the correct decisions: to select suitable tools, set clear guidelines, invest efficiently, and predict precisely how the technology will be applied.

However, optimal LLM utilization cannot be predetermined. An understanding of it can only emerge through experimentation: testing the tools on various tasks, learning continuously, and developing skills. Given the rapid evolution of the technology, timely action is also essential.

This creates a conflict between the desire to immediately adopt the technology without precise knowledge of its best applications, and the inclination to delay adoption until it is clear what it will be used for.

Addressing this conflict requires clarifying and evaluating the underlying assumptions. For instance, what assumptions justify immediate investment to rapidly develop expertise? Are they valid? Conversely, what assumptions demand precise knowledge of technology applications to maximize outcomes? Are those valid? Once we have examined the assumptions and identified the invalid ones, we can resolve the conflict and move forward.

We might also see other conflicts, for example over cost/benefit evaluation, data security, and similar issues.

Moving from a Complicated to a Complex Environment

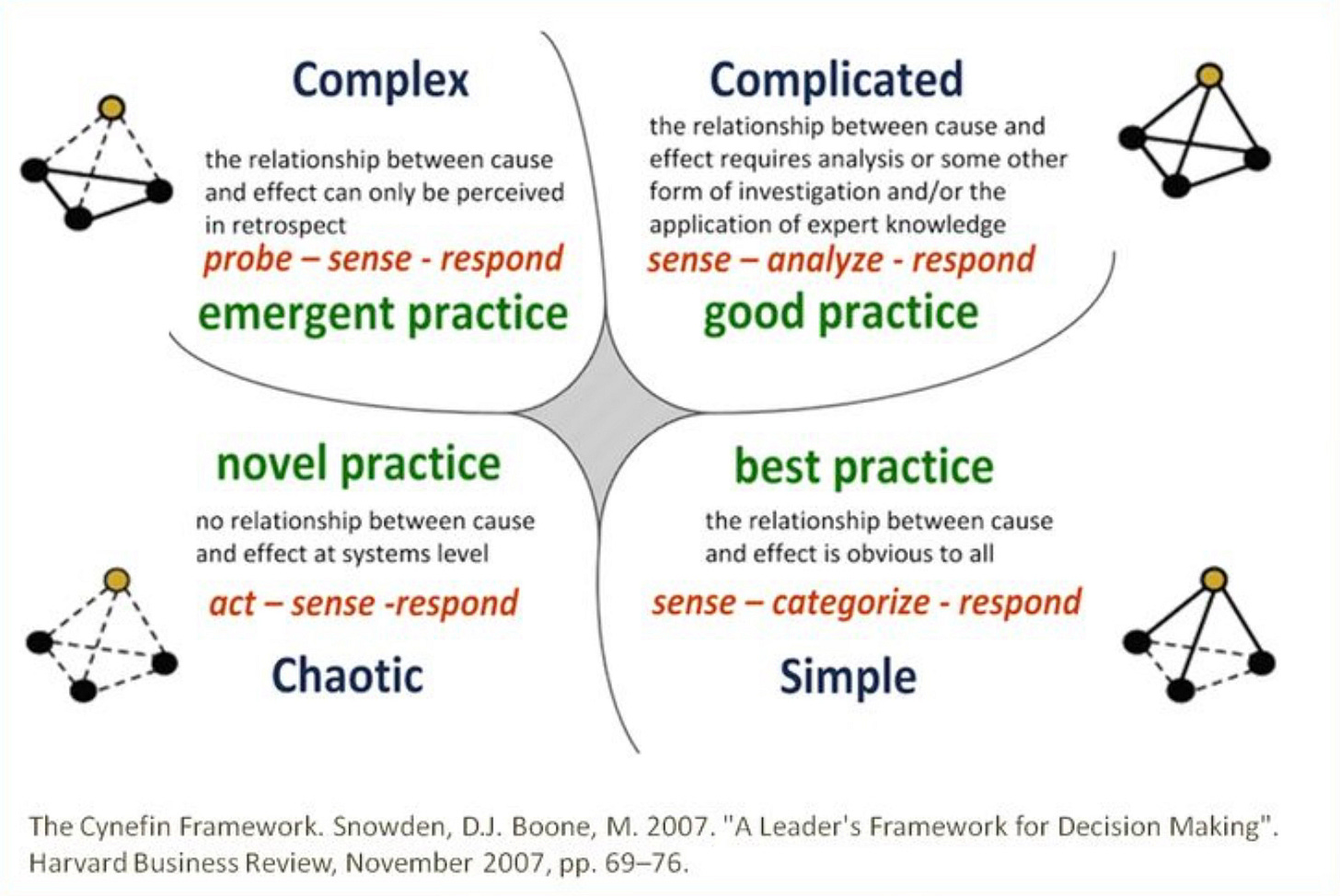

Decision-making depends on environmental complexity. Dave Snowden's Cynefin framework classifies environments into five domains: simple, complicated, complex, chaotic, and disorder. Most corporate environments fit the simple or complicated categories, characterized by clear or identifiable cause-and-effect relationships that enable accurate predictions.

Complex environments, by contrast, involve constant change and uncertain cause-effect relationships understood only retrospectively. Managing within such environments requires ongoing testing, observation, and adaptive response.

Implementing LLMs moves organizations towards a higher degree of complexity. LLMs differ significantly from traditional software, acting more like an assistant whose full capabilities remain uncertain. Predicting optimal applications or workflow modifications based on current capabilities is difficult. The models also keep evolving, both intentionally and autonomously, which means today's optimal model may be obsolete next year. Identifying applications requires equipping employees with the right tools, encouraging experimentation, monitoring outcomes, adjusting practices, learning continuously, and responding decisively.

Are We Up to the Challenge?

Most corporate environments historically feature high certainty regarding cause-effect relationships. LLM implementation disrupts this certainty. While challenging for managers accustomed to predictability, it simultaneously offers significant opportunities. Companies can become genuine learning organizations, fostering democratic knowledge acquisition and sharing, guided by leaders adept at managing in an environment of growing uncertainty.
